Building a Multi-Agent Stock Analysis System - POC

Discover how strategic AI orchestration solves a real investor problem: turning 4 hours of scattered research into 12 minutes of comprehensive analysis. Explore the multi-agent architecture that proves matching models to tasks delivers actionable insights faster than any single "best" model.

11/8/2025 · 11 min read

The Problem I Couldn't Ignore

Like many of you, I manage my own investment portfolio. And like many of you, I've felt that familiar frustration: information overload paired with analysis paralysis.

Before making any investment decision, I'd spend hours jumping between platforms—reading SEC filings on EDGAR, scrolling through financial news on Bloomberg, analyzing charts on TradingView, checking fundamentals on Yahoo Finance. By the time I'd synthesized everything, the market had already moved, or I'd second-guessed myself into inaction.

The irony wasn't lost on me: I work in tech, I understand AI tools, yet here I was, manually doing what felt like it should be automatable. The question kept nagging: Could AI agents actually help, or was this just another overhyped use case?

Turns out, agentic AI systems excel at exactly this kind of work—gathering scattered information, analyzing it through different lenses, and synthesizing insights. So I built one.

Why Individual Investors Struggle (And Why I Did Too)

Let's be honest about what stock research actually requires:

Keeping track of news sentiment across dozens of sources, understanding how it relates to broader market conditions, the company's sector, and macroeconomic trends.

Deciphering technical charts without a background in technical analysis (What is a MACD crossover, anyway?).

Reading 200-page SEC filings to extract what actually matters—management's outlook, risk factors, changes in fundamentals.

Time. Even if you're financially literate, this takes hours per stock.

The result? Most individual investors (myself included) end up making decisions based on gut feeling, incomplete information, or both. We're not timing the market badly—we're just not equipped with the right information at the right time.

I wanted to change that.

The Solution: A Multi-Agent Research Team

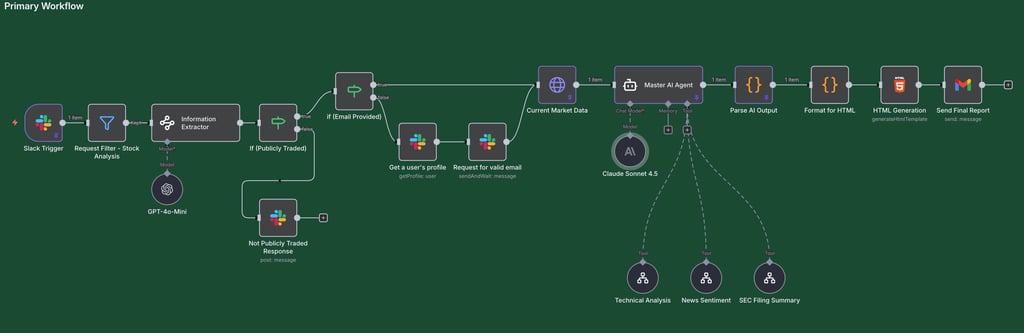

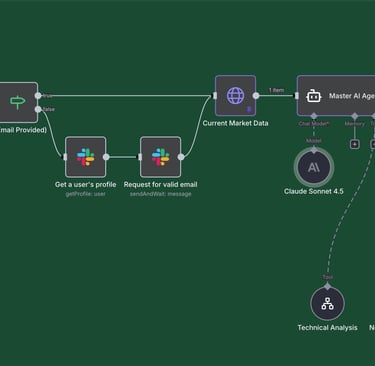

I built what I call a "Financial Research Agent"—though really, it's more like a team of specialized AI agents, each handling what they do best.

Here's how it works: You ask the system to analyze a company (say, "analyze NVIDIA"), and a Master AI Agent orchestrates the entire research process. It gathers company information, current market data, and coordinates three specialized sub-agents:

The Agent Team:

Technical Analysis Agent → Analyzes price charts, identifies patterns, calculates support/resistance levels, interprets MACD signals

News Summary Agent → Reads through dozens of news articles, summarizes each one, captures sentiment, identifies themes

SEC Filing Agent → Digests the latest 10-K and 10-Q reports, extracts key financials, summarizes management commentary, flags risks.

These specialized agents do their work independently, then report back to the Master Agent, which synthesizes everything into a comprehensive investment report delivered straight to your inbox.

Think of it like having a research team where one person handles technicals, another monitors news, another reads SEC filings—except this team works in minutes, not days.

The Architecture: Why Different Agents Need Different Brains

Here's where it gets interesting, and where I learned that not all AI models are created equal. The temptation when building something like this is to pick "the best" AI model and use it everywhere. But after testing and comparing benchmarks, I realized that's like hiring a neurosurgeon to do your taxes—technically capable, but wastefully expensive and not optimally skilled for the task.

Each agent needed to be matched to a model based on what it actually does. Here's what I learned:

1. Information Extractor: GPT-4o-mini

What it does: Takes your natural language query ("analyze Tesla") and extracts the company name, identifies the ticker symbol (TSLA), looks up the CIK number, determines sector and industry.

Why GPT-4o-mini? This is pure structured data extraction. GPT-4o-mini provides reliable structured output with schema validation, so it consistently returns properly formatted data with minimal parsing errors. It is also 20x cheaper on input tokens than a premium model like Claude Sonnet 4.5 ($0.15/M vs $3.00/M, according to pricing comparisons), a 95% cost saving. For this task it handled extraction just as well, so why pay more?
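The price gap can be checked directly from the two input-token prices quoted above. This is a minimal sketch that uses only those two figures; output-token prices are not part of the comparison.

```python
# Input-token prices quoted in this article, in USD per million tokens.
MINI_PRICE = 0.15    # GPT-4o-mini
SONNET_PRICE = 3.00  # Claude Sonnet 4.5

# How many times more expensive the premium model is per input token.
ratio = SONNET_PRICE / MINI_PRICE

# Fraction of input-token spend saved by using the cheaper model.
savings = 1 - MINI_PRICE / SONNET_PRICE

print(f"{ratio:.0f}x cheaper, {savings:.0%} saved on input tokens")
```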

2. Technical Chart Analysis: GPT-5

What it does: Analyzes stock chart images to identify technical patterns (head and shoulders, double tops), calculates support/resistance levels, interprets indicators like MACD and RSI.

Why GPT-5? Vision benchmarks demonstrate GPT-5's superiority for visual analysis. GPT-5 achieved 84.2% on the MMMU (Massive Multi-discipline Multimodal Understanding) benchmark, making it ideal for analyzing charts, UI mockups, or video summaries. Additionally, GPT-5's responses are approximately 45% less likely to contain factual errors than GPT-4o's, and when using thinking mode, GPT-5's responses are approximately 80% less likely to contain factual errors.

Why this matters for chart analysis: when analyzing technical charts, accuracy is paramount. A misread support level or an incorrectly identified pattern could lead to poor investment decisions. GPT-5's superior visual reasoning capabilities and dramatically reduced error rate make it the optimal choice for extracting precise information from stock charts: support/resistance levels, trend directions, and technical indicators.

3. Article Summary Agent: Claude Sonnet 4.5

What it does: Takes each individual news article about the company and produces a detailed summary while capturing the sentiment (Positive/Neutral/Negative).

Why Claude Sonnet 4.5? This is where quality matters more than cost. Claude achieved 90% reliability on summarization benchmarks vs GPT-4's 78%, according to research on the FABLES dataset. That 12-percentage-point difference is critical when subtle sentiment shifts matter.

Is an article "cautiously optimistic" or truly "neutral"? Did management "express concerns" or "remain confident despite headwinds"? Claude's superior nuance detection captures these distinctions that drive investment decisions. Its 200K context window also means it never truncates lengthy financial articles—you get the complete picture. The premium cost is justified: these summaries feed your final investment decision.

4. News Sentiment Generator: Gemini 2.5 Pro

What it does: Takes all the individual article summaries from Claude and synthesizes them into one comprehensive sentiment analysis with proper citations.

Why Gemini 2.5 Pro? After Claude produces high-quality individual summaries, 20-50 of them need to be aggregated. According to Google's official documentation, Gemini's 2M token context window is large enough to take 100+ article summaries in a single request if needed. It is also about 52% cheaper than Claude for this aggregation step ($0.058 vs $0.120 per analysis). The model handles multi-source citations well, which makes it a good fit for combining information from many article summaries.

This two-stage approach (Claude for individual quality → Gemini for cost-effective aggregation) gives you the best of both worlds.
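The two-stage pattern can be sketched as a small map-then-reduce function. This is an illustration only, not the actual n8n workflow: `fake_summarize` and `fake_aggregate` are hypothetical stand-ins for the Claude and Gemini calls, and the keyword-based sentiment rule exists purely so the example runs without a model.

```python
from collections import Counter
from typing import Callable


def summarize_then_aggregate(
    articles: list[str],
    summarize: Callable[[str], dict],
    aggregate: Callable[[list[dict]], str],
) -> dict:
    """Stage 1 runs once per article; stage 2 runs once over all summaries."""
    summaries = [summarize(text) for text in articles]
    distribution = Counter(s["sentiment"] for s in summaries)
    return {
        "overview": aggregate(summaries),
        "sentiment_distribution": dict(distribution),
    }


# Hypothetical stand-ins for the real model calls.
def fake_summarize(text: str) -> dict:
    sentiment = "Positive" if "beat" in text else "Neutral"
    return {"summary": text[:40], "sentiment": sentiment}


def fake_aggregate(summaries: list[dict]) -> str:
    return f"{len(summaries)} articles reviewed"


result = summarize_then_aggregate(
    ["Revenue beat estimates", "Company schedules investor day"],
    fake_summarize,
    fake_aggregate,
)
print(result)
```

Passing the two stages in as functions keeps the pipeline shape independent of which model sits behind each stage, so either one can be swapped without touching the other.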

5. SEC Filing Summary Agent: Gemini 2.5 Pro

What it does: Analyzes the company's latest 10-K and 10-Q filings, extracting financial performance, management commentary (MD&A), risk factors, and accounting notes.

Why Gemini 2.5 Pro? Here's a hard constraint: for most large-cap companies, the latest 10-K combined with the latest 10-Q comes to roughly 200K-280K tokens. Claude's context window? 200K tokens. Not enough.

Gemini's 2M token context window isn't a luxury; it's a requirement. Of the models I compared, it's the only one that can process complete SEC filings in one pass without chunking (which risks losing context at boundaries). It is also about 3x cheaper than Claude for large documents. When token limits force the choice anyway, the cost advantage is a bonus.
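The context-window constraint amounts to a simple fit check before a document is routed to a model. The sketch below uses the window sizes as stated in this article; the model keys are plain labels, the 4-characters-per-token estimate is a rough rule of thumb rather than a real tokenizer, and the 8K output reserve is an assumed value.

```python
# Context limits as stated in this article, in tokens. Keys are labels only.
CONTEXT_LIMITS = {"claude-sonnet-4.5": 200_000, "gemini-2.5-pro": 2_000_000}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough size estimate; a rule of thumb, not a tokenizer."""
    return int(len(text) / chars_per_token)


def models_that_fit(token_count: int, reserve_for_output: int = 8_000) -> list[str]:
    """Return models whose window holds the input plus room for the reply."""
    needed = token_count + reserve_for_output
    return [name for name, limit in CONTEXT_LIMITS.items() if limit >= needed]


# A combined 10-K + 10-Q of 230K tokens only fits the larger window.
print(models_that_fit(230_000))
```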

6. Master AI Agent: Claude Sonnet 4.5

What it does: Orchestrates all the sub-agents, receives their outputs, and synthesizes everything into a final investment report with technical analysis, news sentiment, fundamental analysis, and a clear BUY/HOLD/SELL recommendation.

Why Claude Sonnet 4.5? Three quantifiable reasons based on independent benchmarks:

Strong agentic tool use performance on TAU-bench according to multiple independent evaluations, which suggests it can coordinate multiple agents reliably

55.3% on the Finance Agent benchmark vs Gemini's 29.4% according to Fintool's analysis, nearly 2x better at financial reasoning

82% on SWE-bench Verified for extended agentic coding tasks according to Anthropic's official announcement (the figure reported with parallel test-time compute), demonstrating sustained performance on long-running complex workflows

The Master Agent needs to understand financial concepts, maintain context across multiple data sources, and produce structured, well-reasoned reports. Claude's superior financial analysis capability makes it irreplaceable for this synthesis role.
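The six assignments above boil down to a routing table. Here is a minimal sketch of that table as configuration; the agent keys and the `AgentConfig` type are hypothetical names chosen for illustration, and the model names are plain labels rather than verified API model identifiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentConfig:
    model: str
    reason: str


# One entry per agent described in this section.
AGENT_MODELS = {
    "information_extractor": AgentConfig("GPT-4o-mini", "cheap structured extraction"),
    "technical_analysis": AgentConfig("GPT-5", "chart image understanding"),
    "article_summary": AgentConfig("Claude Sonnet 4.5", "nuanced per-article summaries"),
    "news_sentiment": AgentConfig("Gemini 2.5 Pro", "long-context aggregation"),
    "sec_filing": AgentConfig("Gemini 2.5 Pro", "filings beyond 200K tokens"),
    "master": AgentConfig("Claude Sonnet 4.5", "orchestration and financial reasoning"),
}


def model_for(agent: str) -> str:
    """Look up the model assigned to an agent; unknown agents raise KeyError."""
    return AGENT_MODELS[agent].model


print(model_for("sec_filing"))
```

Keeping the mapping in one place means a model swap is a one-line change, and the stated reason travels with the choice.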

The Technical Stack

Workflow Engine: n8n (self-hosted)

Data Sources:

Alpha Vantage → Real-time market data and news sentiment

chart-img.com → Technical chart generation

sec-api.io → SEC filing search and download

Airtable → Filing storage and caching
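For a sense of what the news fetch looks like outside n8n, the sketch below only builds a request URL and sends nothing. The `NEWS_SENTIMENT` function name and the `tickers`, `limit`, and `apikey` parameters are written from memory of the Alpha Vantage documentation and should be treated as assumptions to verify there; `news_request_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

ALPHA_VANTAGE_URL = "https://www.alphavantage.co/query"


def news_request_url(ticker: str, api_key: str, limit: int = 50) -> str:
    """Build (but do not send) a news-sentiment request URL for one ticker."""
    params = {
        "function": "NEWS_SENTIMENT",  # assumed endpoint name; check the docs
        "tickers": ticker,
        "limit": limit,                # assumed parameter; check the docs
        "apikey": api_key,
    }
    return f"{ALPHA_VANTAGE_URL}?{urlencode(params)}"


print(news_request_url("NVDA", "YOUR_API_KEY"))
```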

How It Actually Works: A Real Example

Let's say I want to analyze NVIDIA before an earnings report:

Step 1: Information Extraction (GPT-4o-mini, ~2 seconds)

Input: "Do nvidia stock analysis"

Output:

    {
      "ticker": "NVDA",
      "company": "NVIDIA Corporation",
      "CIK": "0001045810",
      "sector": "Information Technology",
      "industry": "Semiconductors & Semiconductor Equipment"
    }
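Because every downstream agent depends on this record, it is worth validating before anything else runs. The sketch below assumes the extractor replies with strict JSON containing the five fields shown above; `validate_extraction` is a hypothetical helper, and the ticker pattern is an assumed format, not an exchange rule.

```python
import json
import re

REQUIRED_FIELDS = ("ticker", "company", "CIK", "sector", "industry")


def validate_extraction(raw: str) -> dict:
    """Parse the extractor's JSON reply and reject incomplete or malformed records."""
    record = json.loads(raw)
    missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    # The example CIK is a 10-digit, zero-padded string.
    if not re.fullmatch(r"\d{10}", record["CIK"]):
        raise ValueError("CIK should be a 10-digit, zero-padded string")
    # Assumed ticker shape: 1-6 uppercase letters, dots, or hyphens.
    if not re.fullmatch(r"[A-Z.\-]{1,6}", record["ticker"]):
        raise ValueError("ticker has an unexpected format")
    return record


reply = (
    '{"ticker": "NVDA", "company": "NVIDIA Corporation", "CIK": "0001045810", '
    '"sector": "Information Technology", '
    '"industry": "Semiconductors & Semiconductor Equipment"}'
)
print(validate_extraction(reply)["CIK"])
```

Failing fast here is cheaper than discovering a wrong ticker after the expensive agents have already run.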

Step 2: Parallel Agent Execution

The Master Agent triggers three sub-workflows simultaneously:

→ Technical Analysis (GPT-5, ~60 seconds)

Fetches 6-month chart from chart-img.com

Analyzes current trend (bullish/bearish)

Identifies support levels ($520, $485, $450)

Identifies resistance levels ($580, $620)

Interprets MACD crossover signals

Assesses volume correlation

→ News Analysis Pipeline (Claude + Gemini, ~4 minutes)

Phase 1: Individual Summaries (Claude Sonnet 4.5)

Fetches 50 most recent articles via Alpha Vantage API

Claude analyzes each article individually:

Generates 500-word summary

Assigns sentiment (Positive/Neutral/Negative)

Identifies key catalysts and risks

Phase 2: Aggregation (Gemini 2.5 Pro)

Takes all 50 summaries as input

Synthesizes into comprehensive overview

Determines overall sentiment distribution

Identifies recurring themes

Properly cites all sources with URLs

→ SEC Filing Analysis (Gemini 2.5 Pro, ~4 minutes)

Checks Airtable for cached 10-K/10-Q

If missing, fetches from SEC EDGAR via sec-api.io

Analyzes both documents (combined 230K tokens):

Revenue trends and margin analysis

Management's Discussion & Analysis (MD&A)

Risk factors (new and recurring)

Cash flow and debt analysis

Notes to financial statements

Produces 7-10 paragraph structured summary with citations
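The fan-out in Step 2 is handled by n8n sub-workflows in my setup. As a rough Python analogue, the same idea can be sketched with `asyncio.gather`; `run_agent` is a hypothetical placeholder that sleeps instead of calling a model, and the delays are arbitrary.

```python
import asyncio


async def run_agent(name: str, seconds: float) -> dict:
    """Placeholder for a sub-workflow; sleeps instead of calling a model."""
    await asyncio.sleep(seconds)
    return {"agent": name, "status": "done"}


async def run_all() -> list[dict]:
    # gather() runs the three coroutines concurrently and returns results
    # in the order the coroutines were passed, not the order they finish.
    return await asyncio.gather(
        run_agent("technical_analysis", 0.01),
        run_agent("news_pipeline", 0.03),
        run_agent("sec_filing", 0.03),
    )


results = asyncio.run(run_all())
print([r["agent"] for r in results])
```

With concurrent execution, the wall-clock time of this step is set by the slowest sub-workflow rather than the sum of all three.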

Step 3: Master Synthesis (Claude Sonnet 4.5, ~2 minutes)

Claude receives:

Technical analysis output (2K tokens)

News sentiment summary (3K tokens)

SEC filing analysis (8K tokens)

Current market data (500 tokens)

It produces a comprehensive report containing:

Executive Summary → Company overview, current snapshot, key opportunities/risks

Price & Market Snapshot → Current price vs previous close, daily movement, interpretation

Technical Analysis → Trend direction, momentum, support/resistance, buy/sell zones

Fundamental Analysis → Financial performance, MD&A insights, risk factors, accounting notes

News Sentiment → Overall sentiment, themes, positive/negative developments

Sector Comparison → Position relative to industry peers (based only on filing/news data)

Forward Outlook → Short-term (0-6mo), medium-term (6-24mo), long-term (5yr+) perspectives

Investment Recommendation → Clear BUY/HOLD/SELL with detailed rationale

Citations → All sources from technical, news, and SEC analysis

Total time: ~10-12 minutes

Total cost: ~$1.42

The report gets emailed to me as a formatted HTML document.

The Economics: Why This Actually Makes Sense

Let's talk about cost, because if this were expensive, it wouldn't be practical.

Cost per company analysis: about $1.42.

Compare this to:

Financial advisor fees (1-2% AUM annually)

Premium research subscriptions ($50-200/month for limited coverage)

Your time (hours per stock × opportunity cost)

The cost efficiency comes from strategic model selection—using expensive models only where their capabilities truly matter, and cost-effective models where they perform equally well.
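One way to read the subscription comparison is as a break-even count: how many analyses a monthly fee would pay for. This is a minimal sketch using only the per-analysis cost and the subscription range quoted in this article; `analyses_per_budget` is a hypothetical helper.

```python
COST_PER_ANALYSIS = 1.42        # USD, per-analysis figure reported in this article
SUBSCRIPTION_RANGE = (50, 200)  # USD per month, range quoted above


def analyses_per_budget(budget: float, cost: float = COST_PER_ANALYSIS) -> int:
    """Whole number of analyses a given budget covers."""
    return int(budget // cost)


for monthly_fee in SUBSCRIPTION_RANGE:
    count = analyses_per_budget(monthly_fee)
    print(f"${monthly_fee}/month covers about {count} analyses")
```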

What I Learned Building This

1. Agentic AI is about specialization, not capability

I initially tried using Claude Sonnet 4.5 for everything. It worked, but cost $4-5 per analysis. Realizing that GPT-4o-mini could handle information extraction just as well for 20x less money was a revelation. Match the model to the task, not the task to your favorite model.

2. Two-stage processing often beats single-stage

Using Claude for individual article summaries, then Gemini for aggregation, produces better results than using either model alone. Claude's superior summarization quality (90% vs 78% reliability) feeds Gemini's cost-effective synthesis. The whole is greater than the sum of the parts.

3. Context windows are non-negotiable for some tasks

Chunking an SEC filing risks losing critical context at the chunk boundaries. When a single 10-K is 170K tokens and the accompanying 10-Q pushes the combined input past 200K, you need a model whose context window goes well beyond 200K. Period. This isn't about preference; it's a hard requirement.

4. Prompt engineering is 50% of the solution

Each agent gets a carefully structured prompt:

Role: Define the context ("You are a financial analyst...")

Responsibilities: What the agent needs to accomplish

Instructions: Additional context and considerations

Rules: Hard constraints (e.g., "Use ONLY provided data, DO NOT hallucinate")

Output Format: Exact JSON structure expected

This structure, combined with explicit anti-hallucination instructions, dramatically improves reliability.
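The five-part structure is easy to enforce if every prompt is assembled by the same function. The sketch below is one possible way to do that; `build_prompt` and the `##` section markers are illustrative choices, not the exact prompts used in the workflow, and the example values are placeholders.

```python
def build_prompt(
    role: str,
    responsibilities: list[str],
    instructions: list[str],
    rules: list[str],
    output_format: str,
) -> str:
    """Assemble the five sections in a fixed order so every agent prompt looks alike."""

    def bullets(items: list[str]) -> str:
        return "\n".join(f"- {item}" for item in items)

    sections = [
        ("Role", role),
        ("Responsibilities", bullets(responsibilities)),
        ("Instructions", bullets(instructions)),
        ("Rules", bullets(rules)),
        ("Output Format", output_format),
    ]
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections)


prompt = build_prompt(
    role="You are a financial analyst...",
    responsibilities=["Summarize the article", "Assign a sentiment label"],
    instructions=["Focus on catalysts and risks"],
    rules=["Use ONLY provided data, DO NOT hallucinate"],
    output_format='{"summary": "...", "sentiment": "Positive|Neutral|Negative"}',
)
print(prompt)
```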

5. Benchmarks matter more than hype

I chose models based on quantifiable performance metrics, not marketing claims. When GPT-5 scored 84.2% on MMMU for visual analysis with 45-80% fewer errors than GPT-4o, those numbers were the deciding factor. Real benchmarks beat vibes every time.

6. Minimizing Hallucinations

AI models sometimes "fill in" missing data with plausible-sounding but incorrect information. I gave explicit guardrails in every agent prompt to reduce hallucinations:

"Use ONLY values provided by upstream tools"

"DO NOT use external knowledge or assumptions"

"If data is unknown → return 'not provided'"

"ALWAYS include citations exactly as returned"

These rules, combined with structured output formats, dramatically reduce hallucinations.
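Prompt rules can be backed by a check on the agent's output after the fact. The sketch below is an assumed post-processing step, not something described in the workflow itself: `enforce_guardrails` is a hypothetical helper that fills missing fields with the "not provided" sentinel and separates out any citation that was not supplied by an upstream tool.

```python
NOT_PROVIDED = "not provided"


def enforce_guardrails(output: dict, required: list[str], allowed_sources: set[str]) -> dict:
    """Fill missing fields with a sentinel and drop citations not seen upstream."""
    # Missing or empty values both count as unknown.
    cleaned = {field: output.get(field) or NOT_PROVIDED for field in required}
    citations = output.get("citations", [])
    cleaned["citations"] = [url for url in citations if url in allowed_sources]
    cleaned["rejected_citations"] = [url for url in citations if url not in allowed_sources]
    return cleaned


checked = enforce_guardrails(
    {"trend": "bullish", "citations": ["https://example.com/a", "https://example.com/x"]},
    required=["trend", "support_levels"],
    allowed_sources={"https://example.com/a"},
)
print(checked)
```

Keeping the rejected citations visible, rather than silently dropping them, makes it easier to spot an agent that is drifting away from its sources.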

Real Results: What This Actually Delivers

After using this system for some time, here's what I've found:

Speed: 10-12 minutes per complete analysis vs 6-8 hours manually

Consistency: Every stock gets the same depth of analysis—no shortcuts when I'm tired or busy

Coverage: I can now monitor 50+ stocks in my watchlist without it consuming my life

Confidence: Decisions backed by comprehensive analysis of technicals, news, and fundamentals

Cost: $1.42 per analysis vs $50-200/month for limited subscription services

Most importantly, it combines quantitative (technical indicators, financial metrics) and qualitative (news sentiment, management commentary, risk assessment) insights that would require reading hundreds of pages manually.

Possible Functional Enhancements

This is a proof of concept that demonstrates the viability of agentic AI for financial analysis. For a production-grade application, several enhancements could significantly improve accuracy and utility:

Portfolio Context Integration: Access to current holdings would allow the system to assess how a new investment affects overall portfolio diversity and risk profile. "Does adding more semiconductor exposure align with my goals?"

Personalized Expertise Levels: Asking users about their financial literacy would enable tailored reports, more educational for beginners and more concise for experienced investors.

Investment Horizon Awareness: Allowing users to specify their timeframe (day trading, swing trading, long-term investing) would enable the system to weight different factors appropriately.

Real-Time Alerts: Push notifications when news sentiment shifts dramatically or technical indicators flash signals.

PDF Report Generation: Currently delivers HTML emails. Formatted PDF output would improve archiving and sharing capabilities.

Historical Sentiment Tracking: Tracking sentiment evolution over time would reveal trends and sentiment reversal patterns.

I plan to explore these enhancements in a future article on transforming this proof of concept into a production-ready application with fine-tuned models.

Why This Matters for Agentic AI

If you're reading this from a tech background, you're probably wondering: Is this actually a good use case for agentic AI, or just buzzword engineering?

Fair question. Here's why I think this proof of concept demonstrates one of the better real-world applications:

Clear task decomposition: Stock analysis naturally breaks into distinct subtasks (technical, news, fundamentals), each requiring different skills.

Verifiable outputs: You can check the agent's work against source documents. This isn't generating creative fiction—it's extracting and synthesizing verifiable information.

Measurable value: Time saved and cost savings are quantifiable. The alternative (manual analysis or paid subscriptions) has clear opportunity costs.

Human-in-the-loop by design: The system makes recommendations, but humans make final decisions. It augments judgment rather than replacing it.

Economically viable: At $1.42 per analysis, this crosses the threshold from "interesting experiment" to "actually practical."

It's specialized AI agents doing what they do well—processing information faster and more consistently than humans can, while leaving judgment and decision-making to us.

As a proof of concept, it validates the approach. The next step would be fine-tuning models on financial-specific datasets and building robust production infrastructure—topics I'll explore in future articles.

Final Thoughts

Building this system taught me that the future of practical AI isn't about one superintelligent model that does everything. It's about orchestrating specialized models, each optimized for specific tasks, working together as a coherent system.

The hard parts weren't the AI models themselves—they're readily available via APIs. The hard parts were:

Figuring out which model excels at which task

Structuring prompts to minimize hallucinations

Designing workflows that combine outputs coherently

Building in proper guardrails and citation discipline

If you're thinking about building your own agentic AI system, here's my advice:

Start with a problem that's actually painful. For me, it was spending hours on stock research. For you, it might be something else entirely.

Don't use AI because it's cool—use it because it's the right tool. Sometimes a traditional script or API is better. AI shines when you need flexible interpretation, summarization, or synthesis.

Match models to tasks based on benchmarks, not hype. GPT-5 for vision, Claude for nuanced reasoning, Gemini for long context and cost efficiency. Each has strengths.

Build in verification and citations from day one. AI that makes things up isn't useful. Force it to show its work.

Measure everything. Time saved, cost per operation, accuracy rates. If you can't measure value, you can't justify the complexity.

The democratization of sophisticated AI tools means individual developers can now build systems that would have required entire teams a few years ago. This stock analysis automation is just one example.

The question isn't whether AI can do useful work—it clearly can. The question is whether we're thoughtful enough in how we apply it.

Now if you'll excuse me, I have some stocks to analyze. Fortunately, it'll only take twelve minutes.